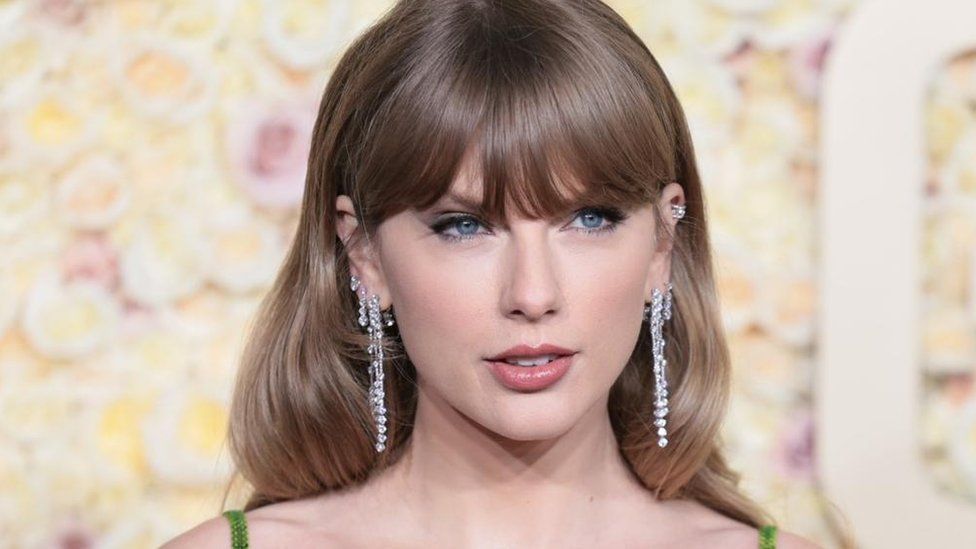

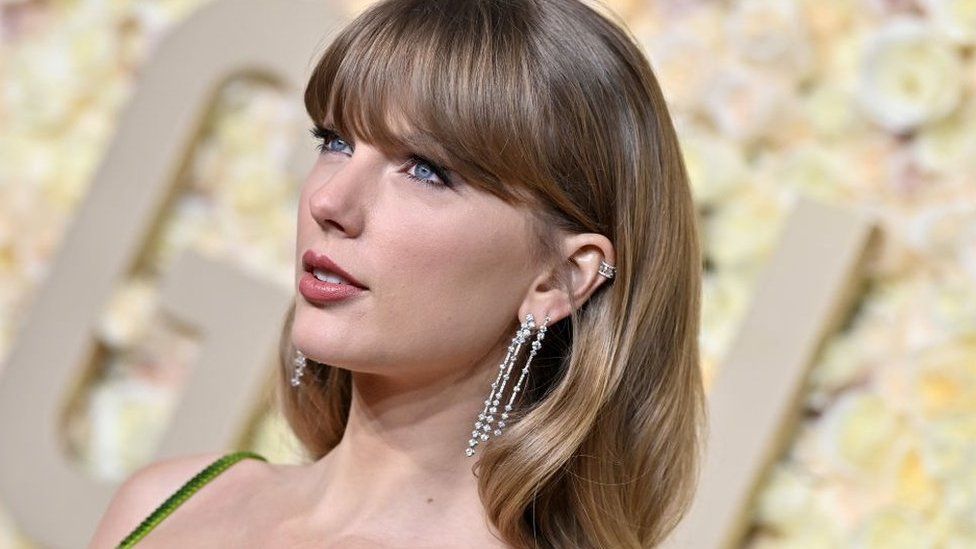

X blocks searches for Taylor Swift after explicit AI images of her go viral

Social media platform X has blocked searches for Taylor Swift after explicit AI-generated images of the singer began circulating on the site.

In a statement to the BBC, X’s head of business operations Joe Benarroch said it was a “temporary action” to prioritise safety.

When searching for Swift on the site, a message appears that says: “Something went wrong. Try reloading.”

Fake graphic images of the singer appeared on the site earlier this week.

Some went viral and were viewed hundreds of thousands of times, prompting alarm from US officials and fans of the singer.

Posts and accounts sharing the fake images were flagged by her fans, who populated the platform with real images and videos of her, using the words “protect Taylor Swift”.

The images prompted X, formerly Twitter, to release a statement on Friday saying that posting non-consensual nudity on the platform is “strictly prohibited”.

“We have a zero-tolerance policy towards such content,” the statement said. “Our teams are actively removing all identified images and taking appropriate actions against the accounts responsible for posting them.”

It is unclear when X began blocking searches for Swift on the site, or whether the site has blocked searches for other public figures or terms in the past.

In his email to the BBC, Mr Benarroch said the action was being taken “with an abundance of caution as we prioritise safety on this issue”.

The issue caught the attention of the White House, which on Friday called the spread of the AI-generated images “alarming”.

“We know that lax enforcement disproportionately impacts women and they also impact girls, sadly, who are the overwhelming targets,” White House press secretary Karine Jean-Pierre said during a briefing.

She added that there should be legislation to tackle the misuse of AI technology on social media, and that platforms should also take their own steps to ban such content on their sites.

“We believe they have an important role to play in enforcing their own rules to prevent the spread of misinformation and non-consensual, intimate imagery of real people,” Ms Jean-Pierre said.

US politicians have also called for new laws to criminalise the creation of deepfake images.

Deepfakes use artificial intelligence to make a video of someone by manipulating their face or body. A 2023 study found a 550% rise in the creation of doctored images since 2019, fuelled by the emergence of AI.

There are currently no US federal laws against the sharing or creation of deepfake images, though there have been moves at state level to tackle the issue.

In the UK, sharing deepfake pornography became illegal under the Online Safety Act in 2023.
